We at The Pluralist Network often enjoy the short writings of Prof. Ethan Mollick.

In particular, we found the following tweet and diagram very helpful:

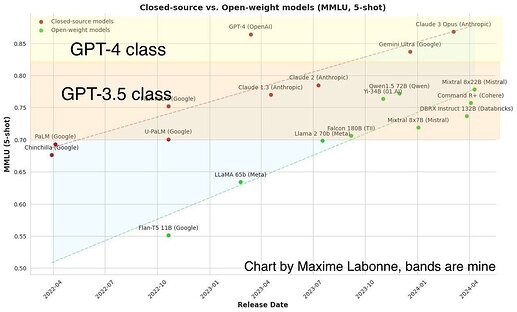

Another person whose posts and writings we enjoy is Maxime Labonne. Here is one of our most recent favorites:

Ezra Klein interviews Dario Amodei (Anthropic)

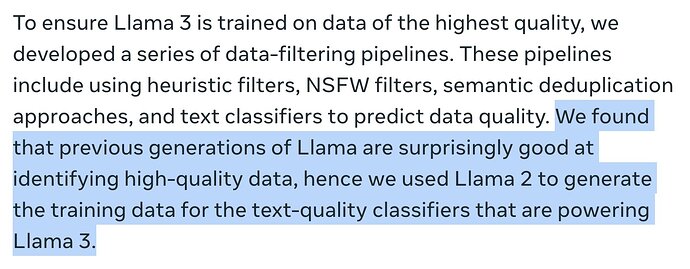

Another interesting person to follow is Kyle Corbitt, who writes analyses of the emerging open-source LLMs. One interesting recent observation of his is that Llama 2 was used to… train Llama 3 (oversimplifying here).

Another interesting perspective: Transcript: Ezra Klein Interviews Dario Amodei (Anthropic) - The Ezra Klein Show, April 12, 2024

Notes:

- Scaling laws: “they’re not laws. They’re observations. They’re predictions.” The idea is that the more computer power and data you feed into A.I. systems, the more powerful those systems get; **the relationship is predictable**, and more, **the relationship is exponential**. What A.I. developers say is that the power of A.I. systems is on this kind of curve, that it has been increasing exponentially.

- Amodei led the team at OpenAI that created GPT-2 and GPT-3. He then left OpenAI to co-found Anthropic.

- “we have done shows with Sam Altman, the head of OpenAI, and Demis Hassabis, the head of Google DeepMind. And it’s worth listening to those two if you find this interesting”

- For guest suggestions: ezrakleinshow@nytimes.com

- Dario: “And then, all of a sudden, when ChatGPT came out, it was like all of that growth that you would expect, all of that excitement over three years, broke through and came rushing in.”

- Dario: (2024-04-12) “I think all of that is coming in the next, I would say — I don’t know — three to 18 months, with increasing levels of ability.”

- Dario: “…today’s models cost of order $100 million to train, plus or minus factor two or three”

- Dario: “The models that are in training now and that will come out at various times later this year or early next year are closer in cost to $1 billion.”

- Dario: “…in 2025 and 2026, we’ll get more towards $5 or $10 billion”

- Ezra Klein: “…you all are getting billions of dollars from Amazon. OpenAI is getting billions of dollars from Microsoft. Google obviously makes its own”

- Ezra: “…the Saudis, are creating big funds to invest in the space” - in 1 or 2 years - $10B, then $100B

- Dario: “…there’s a blooming ecosystem of startups there that don’t need to train these models from scratch. They just need to consume them and maybe modify them a bit.”

- Dario: “…coding agents will advance substantially faster than agents that interact with the real world”

- Dario: “We’re already working with, say, drug discovery scientists, companies like Pfizer or Dana-Farber Cancer Institute, on helping with biomedical diagnosis, drug discovery.”

- Dario: “…AlphaFold, which I have great respect for…”

- Dario: “…by making the models smarter and putting them in a position where they can design the next AlphaFold…”

- Dario: “…we’ve tried to ban the use of these models for persuasion, for campaigning, for lobbying, for electioneering…”

- Dario: “…the largest version of our model is almost as good as the set of humans we hired at changing people’s minds…”

- Dario: “If I go on the internet and I see different comments on some blog or some website, there is a correlation between bad grammar, unclearly expressed thoughts and things that are false, versus good grammar, clearly expressed thoughts and things that are more likely to be accurate. – A.I. unfortunately breaks that correlation…”

- The late philosopher Harry Frankfurt’s book “On Bullshit”: bullshit is actually more dangerous than lying because it has a complete disregard for the truth. The liar has a relationship to the truth; the bullshitter doesn’t care.

- Dario: “…many people have the intuition that the models are sort of eating up data that’s been gathered from the internet, code repos, whatever, and kind of spitting it out intelligently, but sort of spitting it out. And sometimes that leads to the view that the models can’t be better than the data they’re trained on or kind of can’t figure out anything that’s not in the data they’re trained on. — we’re seeing early indications that it’s false.”

- Occam’s razor – Suppose an event has two possible explanations. The explanation that requires the fewest assumptions is usually correct. Another way of saying it is that the more assumptions you have to make, the more unlikely an explanation.

- Dario: A.I. safety levels (A.S.L.), modeled on the biosafety levels that classify how dangerous a virus is…

    - ASL-1 represents systems with no meaningful catastrophic risk.

    - ASL-2 (the current level) applies to systems that exhibit early signs of dangerous capabilities but whose outputs are not yet practically useful.

    - ASL-3 includes systems that significantly increase the risk of catastrophic misuse or exhibit low-level autonomous capabilities.

    - ASL-4 and above have not been fully defined but are expected to involve greater potential for misuse and autonomy.

- Dario: Anthropic, OpenAI, Google DeepMind, and Microsoft all have (or are working on) similar A.S.L. frameworks.

- Dario: Bills have been proposed that look a little bit like our responsible scaling plan.

- R.S.P. → Responsible Scaling Plan

- Dario: the benefits of this technology are going to outweigh its costs

- Ezra: Microsoft, on its own, is opening a new data center globally every three days → federal projections of 20 new gas-fired power plants in the U.S. by 2024 to 2025

- Dario: “…whatever the energy was that was used to mine the next Bitcoin… unable to think of any useful thing that’s created by that.”

- Dario: “We’re hoping to partner with ministries of education in Africa, to see if we can use the models in kind of a positive way for education, rather than the way they may be used by default.” (We are reading the transcript of this interview from a couch in Jinja, Uganda.)

- Dario: everyone agrees the models shouldn’t be outputting copyrighted content verbatim → we don’t think it’s just hoovering up content and spitting it out, or it shouldn’t be spitting it out → our position is that this is sufficiently transformative and, I think the law will back this up, that this is fair use.

- Dario: as A.I. systems become capable of larger and larger slices of cognitive labor, how does society organize itself economically?

- Dario: in the transition from an agrarian society to an industrial society, the meaning of work changed.

- Dario (on how ad-funded websites get paid when an A.I. is doing the browsing): “There’s no reason that the users can’t pay the search A.P.I.s, instead of it being paid through advertising, and then have that propagate through to wherever the original mechanism is that paid the creators of the content. So when value is being created, money can flow through.”

- Ezra: It [A.I.] seems better at coding than it is at other things.

- Dario: Teach your children to be adaptable, to be ready for a world that changes very quickly.

- Ezra: Do I want my kids learning how to use A.I. or being in a context where they’re using it a lot, or actually, do I want to protect them from it as much as I possibly could so they develop more of the capacity to read a book quietly on their own or write a first draft?

- Dario: find a workflow where the thing complements you

- Dario’s book recommendations:

    - “The Making of the Atomic Bomb,” Richard Rhodes

    - “The Expanse” series, James S. A. Corey

    - “The Guns of August,” Barbara Tuchman, a history of how World War I started
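Dario’s training-cost estimates in the notes above (models of order $100 million today, models in training now closer to $1 billion, and $5 to $10 billion in 2025 and 2026) imply a steep step-up with each generation. The short Python sketch below simply restates his numbers as multipliers between consecutive estimates. The labels are our own shorthand, and it ignores his “plus or minus factor two or three” on today’s figure.

```python
# Training-cost estimates quoted by Dario Amodei (April 2024), in US dollars.
# Each entry is (label, low, high); the labels are our own shorthand, and
# single-point estimates use the same value for low and high.
estimates = [
    ("today's models", 100e6, 100e6),
    ("models in training now", 1e9, 1e9),
    ("2025-2026 models", 5e9, 10e9),
]


def step_multipliers(points):
    """Return (label, low_ratio, high_ratio) for each consecutive pair.

    low_ratio compares the low end of the new estimate with the high end of
    the previous one; high_ratio compares the high end with the low end.
    """
    out = []
    for (prev_label, prev_lo, prev_hi), (label, lo, hi) in zip(points, points[1:]):
        out.append((f"{prev_label} -> {label}", lo / prev_hi, hi / prev_lo))
    return out


for label, lo, hi in step_multipliers(estimates):
    print(f"{label}: x{lo:g} to x{hi:g}")

# Overall jump from the first estimate to the last one.
first, last = estimates[0], estimates[-1]
print(f"overall: x{last[1] / first[2]:g} to x{last[2] / first[1]:g}")
```

Taken at face value, that is a 10x jump to the current training runs and another 5x to 10x after that, or roughly 50x to 100x over about two years.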

Quoted Financial Times article (paywalled)

Must-listen podcasts (Ezra Klein Show)

- Transcript: Ezra Klein Interviews Sam Altman - June 11, 2021

- Transcript: Ezra Klein Interviews Demis Hassabis - July 11, 2023

- Transcript: Ezra Klein Interviews Dario Amodei - April 12, 2024